Toward this end, Marcus Noack, a postdoctoral scholar at Lawrence Berkeley National Laboratory in the Center for Advanced Mathematics for Energy Research Applications (CAMERA), and James Sethian, director of CAMERA and Professor of Mathematics at UC Berkeley, have been working with beamline scientists at Brookhaven National Laboratory to develop and test SMART (Surrogate Model Autonomous expeRimenT), a mathematical method that enables autonomous experimental decision making without human interaction. A paper describing SMART and its application in experiments at Brookhaven’s National Synchrotron Light Source II (NSLS-II) was published August 14, 2019 in Nature Scientific Reports.

“Modern scientific instruments are acquiring data at ever-increasing rates, leading to an exponential increase in the size of data sets,” said Noack, lead author on that paper. “Taking full advantage of these acquisition rates requires corresponding advancements in the speed and efficiency not just of data analytics but also experimental control.”

The goal of many experiments is to gain knowledge about the material being studied, and scientists have a well-tested way to do this: they take a sample of the material and measure how it reacts to changes in its environment. User facilities such as Brookhaven’s NSLS-II and the Center for Functional Nanomaterials (CFN) offer access to high-end materials characterization tools. The associated experiments often involve lengthy, complicated procedures, and measurement time is precious. A research team might have only a few days to measure its materials, so it needs to make the most of each step in each measurement.

“A standard approach for users at light sources like the NSLS-II is to manually and exhaustively scan through a sample,” Noack said. “But if you assume the data set is 3D or higher dimensional, at some point this exercise becomes intractable. So what is needed is something that can automatically tell me where I should take my next measurement.”
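A quick back-of-the-envelope calculation shows why exhaustive scanning stops being practical as dimensions are added. The figures used here are assumptions chosen only for illustration (50 points along each axis, 10 seconds per measurement), not values from the NSLS-II experiments.

```python
def exhaustive_scan_cost(points_per_dim, dims, seconds_per_measurement):
    """Return (number of measurements, total hours) for a full regular-grid scan."""
    n_measurements = points_per_dim ** dims          # grid size grows exponentially with dims
    hours = n_measurements * seconds_per_measurement / 3600.0
    return n_measurements, hours


# Assumed (illustrative) numbers: 50 points per axis, 10 s per measurement.
for d in range(1, 5):
    n, h = exhaustive_scan_cost(50, d, 10.0)
    print(f"{d}-D: {n:>10,d} measurements, {h:>10.1f} hours")
```

With these assumed numbers, a 2D scan takes about 7 hours, while a 3D scan takes about 347 hours (roughly two weeks), already far beyond a measurement window of a few days.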

Using Gaussian Process Regression for Intelligent Data Collection

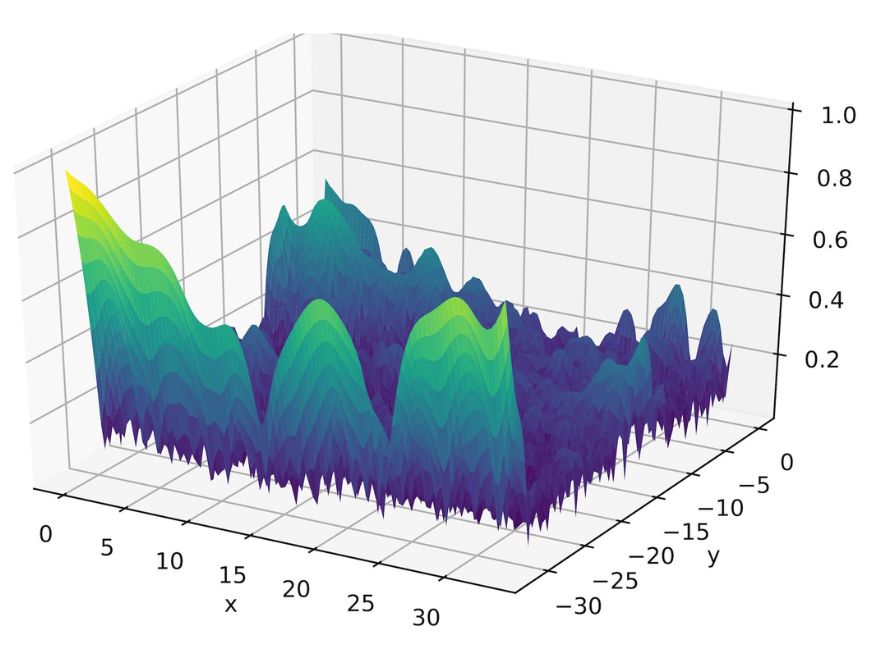

Noack joined Berkeley Lab two years ago to bring mathematics into the design and optimization of experiments, with the ultimate goal of enabling autonomous experiments. The result is SMART, a Python-based algorithm that automatically selects measurements in an experiment. It exploits Gaussian process regression (also known as Kriging) to construct, from the available experimental data, a surrogate model and an error function that estimates how uncertain that model is at each point. Mathematical function optimization is then used to search the error function for its maximum, and the location of that maximum is suggested as the next measurement. This yields a mathematically rigorous, compact approach to performing experiments systematically and efficiently.
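To make the surrogate-model idea concrete, here is a minimal, dependency-free Python sketch of Gaussian process regression with a squared-exponential kernel. It is not SMART's code: the kernel, its fixed length scale of 0.2, the small noise term, and the function names (`surrogate`, `next_measurement`) are illustrative assumptions. The posterior variance plays the role of the error function, and a simple search over a grid of candidate points stands in for the function optimization step.

```python
import itertools
import math


def rbf(a, b, length_scale=0.2):
    """Squared-exponential kernel with unit prior variance; points are tuples."""
    sq = sum((ai - bi) ** 2 for ai, bi in zip(a, b))
    return math.exp(-0.5 * sq / length_scale ** 2)


def cholesky(K):
    """Lower-triangular L with K = L L^T (K must be symmetric positive definite)."""
    n = len(K)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            L[i][j] = math.sqrt(K[i][i] - s) if i == j else (K[i][j] - s) / L[j][j]
    return L


def forward(L, b):
    """Solve L x = b by forward substitution."""
    x = []
    for i, row in enumerate(L):
        x.append((b[i] - sum(row[k] * x[k] for k in range(i))) / row[i])
    return x


def backward(L, b):
    """Solve L^T x = b by back substitution."""
    n = len(L)
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (b[i] - sum(L[k][i] * x[k] for k in range(i + 1, n))) / L[i][i]
    return x


def surrogate(X, y, noise=1e-6):
    """Fit a GP to measurements (X, y); return x -> (posterior mean, posterior variance)."""
    K = [[rbf(a, b) + (noise if i == j else 0.0) for j, b in enumerate(X)]
         for i, a in enumerate(X)]
    L = cholesky(K)
    alpha = backward(L, forward(L, y))  # alpha = K^{-1} y

    def predict(x):
        k = [rbf(x, a) for a in X]
        mean = sum(ki * ai for ki, ai in zip(k, alpha))   # the "educated guess"
        v = forward(L, k)
        var = max(rbf(x, x) - sum(vi * vi for vi in v), 0.0)  # the error function
        return mean, var

    return predict


def next_measurement(X, y, candidates):
    """Pick the candidate where the error function (posterior variance) is largest."""
    predict = surrogate(X, y)
    return max(candidates, key=lambda c: predict(c)[1])


# Usage: four measured corners of a hypothetical 2D sample, 11 x 11 candidate grid.
corners = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (1.0, 1.0)]
values = [0.1, 0.4, 0.3, 0.9]  # made-up measurement values
grid = [(i / 10, j / 10) for i, j in itertools.product(range(11), repeat=2)]
print(next_measurement(corners, values, grid))  # → (0.5, 0.5)
```

The variance is largest at the center of the square, the candidate farthest from every measured corner, so that is where the sketch suggests measuring next. A production code would typically tune the kernel hyperparameters to the data and use a continuous optimizer rather than a fixed grid.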

“People have been doing intelligent data collection for a long time, but for beamline scientists this is the first application of the most sophisticated generation of Gaussian processes,” said Sethian, a co-author on the Nature Scientific Reports paper. “By exploiting Gaussian processes, approximation theory, and optimization, Marcus has designed a framework to bring autonomous optimized modeling and AI to beamline science.”

In practice, before starting an experiment, the scientists provide SMART with a set of goals they want to get out of the experiment. The raw data is sent to automated analysis software, usually available at beamlines, and then handed to the SMART decision-making algorithm. To determine the next measurement, the algorithm creates a surrogate model of the data, which is comparable to an educated guess about how the material will behave in the next possible steps, and calculates the uncertainty (basically, how confident it is in its guess) for each possible next step. Based on this, it selects the most uncertain option to measure next. The trick is that by measuring where it is most uncertain, the algorithm maximizes the amount of knowledge it gains from each measurement. The algorithm also decides when to end the experiment by determining the point at which additional measurements would yield no new knowledge about the material.
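The measure-model-select loop described above, including the stopping rule, can be sketched in a few dozen lines of Python. This is an illustration under stated assumptions, not SMART's implementation: the domain is one-dimensional, `measure` is a hypothetical stand-in for running the beamline and the automated analysis, the kernel length scale (0.15) is fixed, and "no new knowledge" is approximated as "the largest posterior variance on a candidate grid has dropped below a tolerance `tol`".

```python
import math


def rbf(a, b, length_scale=0.15):
    """Squared-exponential kernel on scalars with unit prior variance."""
    return math.exp(-0.5 * (a - b) ** 2 / length_scale ** 2)


def cholesky(K):
    n = len(K)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            L[i][j] = math.sqrt(K[i][i] - s) if i == j else (K[i][j] - s) / L[j][j]
    return L


def solve_lower(L, b):
    x = []
    for i, row in enumerate(L):
        x.append((b[i] - sum(row[k] * x[k] for k in range(i))) / row[i])
    return x


def solve_upper(L, b):
    n = len(L)
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (b[i] - sum(L[k][i] * x[k] for k in range(i + 1, n))) / L[i][i]
    return x


def fit(X, y, noise=1e-6):
    """Cholesky factor of the kernel matrix and alpha = K^{-1} y."""
    K = [[rbf(a, b) + (noise if i == j else 0.0) for j, b in enumerate(X)]
         for i, a in enumerate(X)]
    L = cholesky(K)
    return L, solve_upper(L, solve_lower(L, y))


def predict(X, L, alpha, x):
    """Posterior mean (surrogate guess) and variance (uncertainty) at x."""
    k = [rbf(x, a) for a in X]
    v = solve_lower(L, k)
    mean = sum(ki * ai for ki, ai in zip(k, alpha))
    return mean, max(1.0 - sum(vi * vi for vi in v), 0.0)  # prior variance k(x, x) = 1


def autonomous_experiment(measure, lo, hi, tol=0.01, max_steps=50, n_candidates=201):
    candidates = [lo + (hi - lo) * i / (n_candidates - 1) for i in range(n_candidates)]
    X = [lo, hi]                      # assumed starting design: both ends of the range
    y = [measure(x) for x in X]
    for _ in range(max_steps):
        L, alpha = fit(X, y)
        variances = [predict(X, L, alpha, c)[1] for c in candidates]
        best = max(range(n_candidates), key=lambda i: variances[i])
        if variances[best] < tol:     # stop: no candidate is uncertain enough to be worth it
            break
        X.append(candidates[best])    # measure where the surrogate is least confident
        y.append(measure(candidates[best]))
    return X, y


# Usage with a made-up "material response" standing in for a real measurement.
X, y = autonomous_experiment(lambda x: math.sin(6 * x), 0.0, 1.0)
print(f"stopped after {len(X)} measurements")
```

Because the kernel hyperparameters are fixed here, the variance, and therefore the sequence of measurement locations, does not depend on the measured values. Practical Gaussian process codes usually refit the hyperparameters as data arrive, which lets the data influence where to measure next.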

“The basic idea is, given a bunch of experiments, how can you automatically pick the next best one?” said Sethian. “Marcus has built a world which builds an approximate surrogate model on the basis of your previous experiments and suggests the best or most appropriate experiment to try next.”

“The final goal is not only to take data faster but also to improve the quality of the data we collect,” said Kevin Yager, co-author and CFN scientist. “I think of it as experimentalists switching from micromanaging their experiment to managing at a higher level. Instead of having to decide where to measure next on the sample, the scientists can instead think about the big picture, which is ultimately what we as scientists are trying to do.”

Enabling Autonomous X-Ray Scattering Experiments at NSLS-II

In experiments run at NSLS-II, the collaborators used SMART to demonstrate autonomous experiments using x-ray scattering. The first experimental setup was on NSLS-II’s Complex Materials Scattering (CMS) beamline, which offers ultrabright x-rays to study the nanostructure of different materials. For their first fully autonomous experiment, the team imaged the thickness of a droplet of nanoparticles using a technique called small-angle x-ray scattering at the CMS beamline. After their initial success, they reached out to other users and proposed having them test SMART on their scientific problems. Since then they have measured a number of samples, Yager noted.

“This is an exciting part of this collaboration,” said Masafumi Fukuto, co-author of the study and scientist at NSLS-II. “We all provided an essential piece for it: the CAMERA team worked on the decision-making algorithm, Kevin from CFN worked on the real-time data analysis, and we at NSLS-II provided the automation for the measurements.”

While the code has been shown to be stable and to work well, Noack is improving SMART to make it more powerful, faster on larger numbers of measurements, and less computationally expensive. In the meantime, scientists at other beamlines are expressing interest in using SMART, and new experiments are already scheduled at Brookhaven and Berkeley Lab’s Advanced Light Source.

SMART isn’t intended just for beamline experiments, however. “SMART is implemented in a way that has nothing to do with a beamline,” Noack said. “If you want to explore a space where your data lives, all you need to know is how big that space is, that it has a beginning and an end in every dimension, and you can press SMART and it will give you everything. As long as you put numbers on it and a number on how much you like it, generally any experiment will work with SMART.”
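Noack's description implies that the search space can be specified by nothing more than a lower and an upper bound in each dimension. A minimal sketch of such a description follows; the `Domain` class and its methods are hypothetical, not part of SMART. Rescaling to the unit hypercube is one common convenience for Gaussian process methods, since a single kernel length scale then refers to the same fraction of the range along every axis, regardless of physical units.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Domain:
    """Hypothetical search-space description: one (lower, upper) pair per dimension."""
    bounds: tuple

    def __post_init__(self):
        for lo, hi in self.bounds:
            if not lo < hi:
                raise ValueError(f"empty or inverted interval: ({lo}, {hi})")

    @property
    def dims(self):
        return len(self.bounds)

    def to_unit(self, point):
        """Rescale a physical point into the unit hypercube [0, 1]^d."""
        return tuple((p - lo) / (hi - lo) for p, (lo, hi) in zip(point, self.bounds))

    def from_unit(self, u):
        """Map a unit-hypercube point back to physical coordinates."""
        return tuple(lo + ui * (hi - lo) for ui, (lo, hi) in zip(u, self.bounds))

    def grid(self, points_per_dim):
        """Regular candidate grid in physical units (points_per_dim ** dims points)."""
        if points_per_dim < 2:
            raise ValueError("need at least 2 points per dimension")
        axis = [i / (points_per_dim - 1) for i in range(points_per_dim)]
        return [self.from_unit(u) for u in product(axis, repeat=self.dims)]


# Usage with made-up bounds: a 0-10 mm sample position and a 25-200 degrees C temperature.
domain = Domain(((0.0, 10.0), (25.0, 200.0)))
print(domain.to_unit((5.0, 112.5)))  # → (0.5, 0.5)
print(len(domain.grid(5)))           # → 25
```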

The National Synchrotron Light Source II and the Center for Functional Nanomaterials are DOE Office of Science User Facilities.

About Computing Sciences at Berkeley Lab

High performance computing plays a critical role in scientific discovery. Researchers increasingly rely on advances in computer science, mathematics, computational science, data science, and large-scale computing and networking to increase our understanding of ourselves, our planet, and our universe. Berkeley Lab’s Computing Sciences Area researches, develops, and deploys new foundations, tools, and technologies to meet these needs and to advance research across a broad range of scientific disciplines.
